Domino-docker explained – Part 1: Why run Domino inside a container?

Introduction

For a long time I’ve been rather sceptical about running Domino inside a container. Containers are meant for microservices, aren’t they? And Domino is anything but a microservice. Nevertheless, when Ulrich Krause wrote his blog article in 2017 on how to create a Domino 9.0.1 FP10 container, I was intrigued. When I tried it, I ran into one of the potential downsides of running containers: no matter what operating system you use as the base for your Domino server image, your container will use the kernel of your host’s OS. Domino 9.0.1 couldn’t handle my bleeding-edge Linux kernel (I was running Fedora at the time), so that was the end of my first attempt to run Domino inside a container.
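The kernel point is easy to check for yourself on a Linux host. The lines below are a minimal sketch, assuming Docker is installed and using the public `alpine` image purely as a small example (the image choice is my assumption, any Linux image would do). Both commands should report the same kernel release, because the container has no kernel of its own.

```shell
#!/bin/sh
# Kernel release as reported by the host itself.
host_kernel="$(uname -r)"
echo "host kernel:      ${host_kernel}"

# Kernel release as reported from inside a container.
# 'alpine' is only an example image (assumption); the step is skipped
# when docker is not installed or the daemon cannot be reached.
if command -v docker >/dev/null 2>&1; then
  container_kernel="$(docker run --rm alpine uname -r 2>/dev/null)" \
    || container_kernel="(could not start a container)"
  echo "container kernel: ${container_kernel}"
fi
```

On a Mac or Windows machine the container engine runs inside a small Linux virtual machine, so there the container reports the kernel of that VM rather than that of your desktop OS.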

In November 2018, Thomas Hampel (at that time still working for IBM) created the domino-docker GitHub repository as an open source initiative to create scripts that would make it easier to run Domino inside a container. Even though the repository was started by IBM, the work was done by the community, with most of it done by one man in particular: Daniel Nashed. He contributed his Linux start/stop scripts to the project, but also wrote scripts to completely automate the build of the images. This is necessary as HCL, like IBM in 2018, doesn’t allow the free distribution of Domino and its add-on products. This means we can’t just upload Domino images to the Docker Hub. Instead you need to have access to the actual install packages in FlexNet and create your own container image.

In recent years, since HCL took over the development of Domino, we have seen a more symbiotic relationship between the Domino-docker project and HCL’s Domino development. For example, a great idea in the project, a variable-based setup of the Domino server, was translated into the one-touch setup with the config.json file, which was added to the core of Domino in Domino 12. This new feature was then used by the project to simplify its setup procedure. I really like seeing this kind of symbiosis between open source projects and HCL’s Domino development.

At the end of 2020 I decided to give Domino on Docker another try. My immediate reason was that my home server was a bit limited in resources, and containers are more efficient resource-wise than virtual machines. I discovered that there are many more good reasons to run Domino inside a container, but I’ll get to that later. I had rebuilt my server on CentOS 8 Stream, so the kernel problem was solved. While working with the scripts, I realised two things:

- Daniel has built fantastic scripts to both build and run Domino containers

- With so much functionality added, the project didn’t manage to document this new functionality in detail

With help from Daniel, I managed to build my own customised container, and over the past months I have experienced all the benefits of running Domino as a container, combined with the scripts from the Domino Docker project. However, if this project is to get the attention it deserves, the documentation needs to be fixed, and this is exactly what I’ll try to do in a series of 6 articles:

Part 1 : Why run Domino inside a container

Part 2 : Creating your first Domino image

Part 3 : Running your first Domino server in a container

Part 4 : The domino_container script

Part 5 : Adding addons on top of your Domino image

Part 6 : Creating your own customisations

Why run Domino inside a container

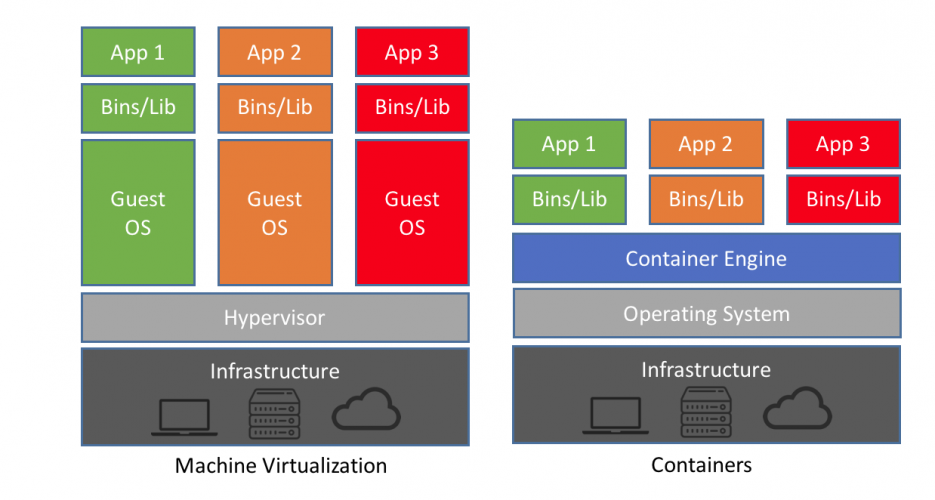

When we talk about containers, we usually look at them as the alternative to virtual machines, as in the picture above. In that comparison containers are far more resource-conscious, as they save both the system resources and the maintenance of an extra guest OS.

However, with Domino it doesn’t work like this. As Domino consists of many services which all need their own ports, you need a separate IP address per Domino server. Theoretically you could arrange this and there are business partners who offer their Domino servers as containers through a Kubernetes cluster, but the scripts from the Domino docker project assume that you run one Domino server per OS. This means that running Domino as a container introduces an extra layer between the operating system and the Domino server. This is not new to Domino as the Java server controller that is used by default on Windows basically does the same, but the question remains why you would want this on Linux? I present 3 reasons:

- Standardisation

- Portability

- Upgradability

Let me clarify.

Standardisation

When I’m working on a Domino server for one of our customers, I can usually see from the way it’s set up who built the server. The way start/stop scripts are set up, the location of directories, security settings etc. often differ per server. Also, whether the Domino program directory is in /opt/ibm or /opt/hcl (or sometimes even /opt/lotus) is usually a dead giveaway of the age of the server. Finding out where everything you need is located on a particular server is a fun guessing game, but not when you’re in a hurry. I’m also confronted with a plethora of Domino versions. Some customers are still on Domino 9.0.1 FP10, others on Domino 10.0, while we also have customers with servers on Domino 11.0.1 FP1, FP2 and FP3. How cool would it be if all servers were built in exactly the same way, so that you always know which paths were used and how to open the console? How nice would it be if servers with a specific Domino version were all on the same fix pack? This also makes it easy to have the exact same setup on your development, test and production servers, and to create universal documentation for all environments!

Just to be clear, you can still customise your server to a certain degree: add custom libraries for specific cases, put the directory for your transaction logs on a different disk from your Domino data directory, and so on. But the base is always the same, and that is a big advantage.

Portability

Who has never used a portable app? A lot of software offers a portable version these days, where you don’t have to run an installer before you can use the software. Domino as a container is Domino’s version of a portable app. No installation required. Just copy the Domino data directory to the server where you want to run your server. Make sure this server has a container engine. Copy the container configuration file to /etc/sysconfig, and you can start your server.
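To make the move concrete, here is a minimal sketch that only prints the steps described above instead of executing them. The host name, the data directory `/local/notesdata`, the file name `/etc/sysconfig/domino_container` and the `domino_container start` command are assumptions for illustration; check your own setup and the project’s scripts for the actual names.

```shell
#!/bin/sh
# Print (do not execute) the steps for moving a containerised Domino server.
# All host names, paths and commands here are illustrative assumptions.
print_move_plan() {
  src_host="$1"   # old host that currently runs the server (hypothetical)
  data_dir="$2"   # Domino data directory
  cfg_file="$3"   # container configuration file under /etc/sysconfig

  # 1. Copy the Domino data directory to the new host.
  echo "rsync -a ${src_host}:${data_dir}/ ${data_dir}/"
  # 2. Copy the container configuration file.
  echo "scp ${src_host}:${cfg_file} ${cfg_file}"
  # 3. Start the server (assumed command of the project's script).
  echo "domino_container start"
}

print_move_plan old-host.example.com /local/notesdata /etc/sysconfig/domino_container
```

Printing the commands first lets you review paths and host names before anything is copied. Stop the Domino server on the old host before copying, so the databases are in a consistent state.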

Upgradability

Domino has a great track record in upgradability: you can upgrade a server from Domino 9.0.1 to Domino 11.0.1 FP3 in less than an hour. However, if you have to upgrade 10 servers, that’s still more than a day’s work. As a result we see a lot of versions of Domino lingering about. As said earlier, among our customers we see all variants of Domino 11, multiple versions of Domino 10 and some even on Domino 9. How about upgrading a server in less than a minute?

With the scripts of the domino docker project, you can build an image of a particular version within minutes (on my server it takes less than 6 minutes to build an image with for example Domino 11.0.1 FP3). You can upload this version to a docker repository on your intranet. This is a one-time action per version. When this is done, upgrading a Domino server consists of just 2 commands:

docker pull <localhost or your own docker repository>/hclcom/domino
domino_container update

Replace ‘docker’ with ‘podman’ if that’s the container engine you use. The first command downloads the latest version of your Domino image to the local machine. Depending on network speed etc., this takes about 20 seconds. The second command updates the Domino container by stopping Domino, removing the container and creating a new container based on the image. This takes less than 40 seconds on an average Domino server. So there you go: upgrading a server in less than a minute. Suddenly, time is no longer a reason to postpone an upgrade.
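If you manage servers with both engines, the two upgrade commands can be wrapped in a small helper. This is only a sketch: the `CONTAINER_CMD` variable, the function names and the registry name `registry.example.com` are hypothetical names chosen for this example. It prints the commands rather than running them.

```shell
#!/bin/sh
# Choose the container engine: honour CONTAINER_CMD if the caller set it
# (hypothetical variable for this sketch), otherwise prefer podman when
# it is installed and fall back to docker.
pick_engine() {
  if [ -n "${CONTAINER_CMD:-}" ]; then
    echo "$CONTAINER_CMD"
  elif command -v podman >/dev/null 2>&1; then
    echo podman
  else
    echo docker
  fi
}

# Print the two upgrade commands for the given image reference.
upgrade_commands() {
  image="$1"
  echo "$(pick_engine) pull ${image}"
  echo "domino_container update"
}

# Dry run: shows what would be executed on this machine.
upgrade_commands "registry.example.com/hclcom/domino"
```

Once the output looks right, you can run the two lines by hand or pipe the output to `sh`.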

If these reasons convinced you to try out Domino on Docker, continue with Part 2 to learn how to create your first image.